“I have given the record of what one man thought as he pursued research and pressed his hands against the confining walls of scientific method in his time. But men see differently. I can at best report only from my own wilderness.”

---- Loren Eiseley

This is a slightly expanded version of a presentation I gave earlier this month together with Tony O'Dowd of Kantan, who focused more on Kantan's accomplishments in 2016. The Kantan webinar can be accessed in this video, though be warned that the first few minutes of my presentation have some annoying, scratchy audio disturbance. Tony speaks for the first 23 minutes, then I do, and some questions follow.

2016 was actually a really good year for machine translation technology: MT had a lot more buzz than it had had in the previous 10 years, and there were some breakthrough advances in the basic technology. It was also the year I left Asia Online and got to engage with the vibrant, much more exciting and rapidly moving world of MT outside Thailand. As you can see from this blog, I had a lot more to say after my departure. The following statements are mostly just opinions (with some factual basis), and I stand ready to be corrected and challenged on every statement I make here. Hopefully some of you who read this will have differing opinions that you are willing to share in the comments.


MT Dominates Global Translation Activity

For those who have any doubt about how pervasive MT is today (whatever you may think of the output quality), the following graphic makes it clear. To put this in context, Lionbridge reported about 2B words translated in the year, and SDL informed us earlier this month that they do 100M words a month with TEP (translation, editing, proofreading) and over 20B words a month with MT. The words translated by MT vendors, together with those from the large public engines around the world, probably easily add up to over 500B MT words a day! If you look closely below, Google even gives us some sense of which languages are the biggest. My rough estimate is that the traditional translation industry does about 0.016% of the total words translated every day, or, put the other way, that computers do ~99.98% of all language translation done today.

Neural MT and Adaptive MT Arrive

2016 is also the year that Neural MT came into the limelight. Those who followed the WMT16 evaluations, and people at the University of Edinburgh, already knew that NMT systems were especially promising, but once Facebook announced that they were shifting to Neural MT systems, it suddenly became hot. Google, Microsoft and Baidu all acknowledged the super coolness of NMT, and the next major announcement came from SYSTRAN, about their Pure NMT systems. This was quickly followed a month later by Google's over-the-top and somewhat false claim that their new NMT system produced translation as good as "human-quality" translation. (It now appears that Mike Schuster is innocent here, and that some marketing dude is responsible for this outrageous claim.) This claim sent the tech press into hyper-wow mode, and soon the airwaves were abuzz with the magic of this new Google Neural MT. Microsoft also announced that they do 80% of their MT with Neural MT systems. By the way, the list also tells you which languages account for 80% of their translation traffic. KantanMT and tauyou also started experimenting, and SDL has been experimenting for some time, BUT experiments do not a product make. And now all the major web portals are focused on shifting to generic NMT systems as quickly as possible.

2016 also saw the arrival of production Adaptive MT systems. These systems, though based on phrase-based SMT technology, learn rapidly and dynamically as translators work. A company called Lilt was first to market and is the current market leader in Adaptive MT, but SDL is close behind, and 2017 could present quite a different picture. ModernMT, an EU-based initiative, has also shown prototypes of its Adaptive MT. Nobody has been able to build any real momentum with translators yet, but this technology is a very good fit for an individual translator.

2016 also saw Microsoft introduce several speech-to-speech MT offerings, and while these have not gotten the same publicity as NMT, these speech initiatives are a big deal in my opinion, since, as we all know from Star Trek, speech is the final frontier for automated translation.

The Outlook for 2017 and Beyond

Neural MT Outlook

Given the evidence of improved output quality, and the fact that Facebook, SYSTRAN, Google, Microsoft, Baidu, Naver and Yandex are all exploring and deploying Neural MT, it will continue to improve and be more widely deployed. SYSTRAN will hopefully provide evidence of domain adaptation and specialization with PNMT for enterprise applications. We will see many others try to get on the NMT wagon, but I don't expect all MT vendors to have the resources needed to put market-ready solutions in place. Developing deliverable NMT products requires investment that is, for the moment, quite substantial, and it will take more than an alliance with an academic institution. However, NMT's success even at this early stage suggests that it is likely to replace SMT eventually, as training and deployment costs fall or as quality differentials increase.

Adaptive MT Outlook

While phrase-based SMT is well established now, and we have many successful enterprise applications of it, this latest variant looks quite promising. Adaptive MT continues to build momentum in the professional translation world, and it is the first evolution of the basic MT technology to get positive reviews from both experienced and new translators. While Lilt continues to lead the market, SDL is close behind and could change the market landscape if it executes well. ModernMT may also be a player in 2017 and will supposedly release an open source version. This MT model is driven by linguistic and corrective feedback, one sentence or word at a time, and is thus especially well suited to become the preferred MT model for the professional translation industry. It can be deployed at an enterprise level or at an individual freelancer level, and I think Adaptive MT is a much better strategy than a DIY Moses approach. Both Lilt and SDL start with better baselines than 95% (maybe 99%) of the Moses systems out there, and with active corrective feedback they can improve rapidly enough to be useful in production translation work. Remember that a feedback system improves upon what it already knows, so the quality of the foundational MT system also really matters. I suspect that in 2017 these systems will outperform NMT systems, but it would be great for someone to do a proper evaluation to better define this. I would not be surprised if this technology also supersedes current TM technology and becomes a cloud-based Super-TM that learns and improves as you work.

Understanding MT Quality

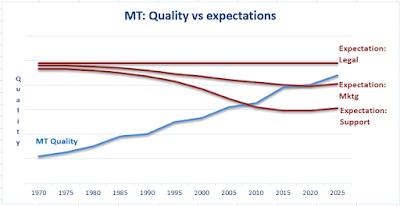

Measuring MT quality continues to be a basic challenge, and the research community has been unable to come up with a better measure than BLEU. TAUS DQF is comprehensive but too expensive and complicated to deploy consistently, and is thus less useful. Neither Neural MT nor Adaptive MT can really be measured accurately with BLEU, but practitioners continue to use it in spite of all its shortcomings because of its longitudinal data history. With NMT output, we are seeing that small BLEU differences are often perceived by humans as big improvements. Adaptive MT engines that are actively being used can have a higher BLEU every hour, and what probably matters more is how rapidly the engine improves, rather than its score at any given point. Some in the industry diligently collect productivity metrics and then also reference BLEU and something like Edit Distance to create an Effort Score that, over time, can become a very meaningful and accurate measurement in a professional use context. GALA could sponsor a more comprehensive survey to develop much better MT metrics. If multiple agencies collaborate and share MT and PEMT experience data, we could get to a point where the numbers are much more meaningful and consistent across agencies.

Post-Editing Compensation

I have noticed that a post I wrote in 2012 on PEMT compensation remains one of the most widely read posts I have ever written. PEMT compensation remains an issue that causes dissatisfaction and MT project failure to this day. Some kind of standardization is needed to link editing and correction effort to measured MT quality in a trusted and transparent way. Practitioners need to collect productivity, editing effort and automated score data to see how these align with payment practices. Again, GALA or other industry associations can collaborate to gather this data and form more standardized recommendations. As this type of effort/pay/quality data is gathered and shared, more standardized approaches will emerge and become better understood. There is a need to reduce the black art and make all the drivers of compensation clearer. In time, PEMT compensation can become as clear as fuzzy-match-based compensation, but it will require good faith negotiation in the interim while this is worked out. While MT will proliferate and indeed get better, competent translators will become a scarcer commodity, and I do not buy the 2029 singularity theory of perfect MT. It would be wise for agencies to build long-term trusted relationships with translators and editors, as this is how real quality will be created, now and in the future.
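To make the idea of linking editing effort to pay a little more concrete, here is a minimal sketch. It assumes that effort is measured as a word-level edit distance between the raw MT output and the translator's final version, divided by the length of the final version (a simplified, TER-like measure without block shifts), and that this score is mapped onto pay bands the way fuzzy-match grids work for TM. The function names, band thresholds and rates are all hypothetical, chosen only for illustration; they are not an industry standard or any vendor's actual scheme.

```python
# Hypothetical sketch: word-level edit distance as a post-editing effort score,
# mapped onto illustrative pay bands (analogous to fuzzy-match grids).
# Thresholds and rates are invented for illustration, not a real standard.


def word_edit_distance(hyp: list[str], ref: list[str]) -> int:
    """Minimum word insertions, deletions and substitutions turning hyp into ref."""
    prev = list(range(len(ref) + 1))  # distance from empty hyp to each ref prefix
    for i, h in enumerate(hyp, start=1):
        curr = [i] + [0] * len(ref)
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr[j] = min(prev[j] + 1,         # delete h
                          curr[j - 1] + 1,     # insert r
                          prev[j - 1] + cost)  # keep or substitute
        prev = curr
    return prev[-1]


def edit_effort(mt_output: str, post_edited: str) -> float:
    """Edits per word of the final text; 0.0 means the MT was left untouched."""
    hyp, ref = mt_output.split(), post_edited.split()
    return word_edit_distance(hyp, ref) / max(len(ref), 1)


# Hypothetical bands: (maximum effort, fraction of the full per-word rate paid).
PAY_BANDS = [(0.0, 0.30), (0.10, 0.50), (0.30, 0.75), (float("inf"), 1.00)]


def pay_fraction(effort: float) -> float:
    """Return the pay fraction of the first band whose ceiling covers the effort."""
    for max_effort, fraction in PAY_BANDS:
        if effort <= max_effort:
            return fraction
    return PAY_BANDS[-1][1]
```

For example, `edit_effort("the cat sat on mat", "the cat sat on the mat")` needs one inserted word over a six-word final version, an effort of about 0.17, which lands in the third hypothetical band (75% of the full rate). Real thresholds would have to come from the kind of shared effort, quality and pay data this section argues for.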

Wishing you all a Happy, Healthy & Prosperous New Year