I recently had the opportunity to speak to a group of translators and interpreters about machine translation (MT) and how it increasingly affects their working lives. As more and more agencies adopt MT, translators are much more likely to be approached for post-editing work, so my message at the event focused on how to assess these opportunities (or hazards) and get the most benefit out of any such engagement.

Translators have much more power than they realize and I predict that they will eventually learn to separate the wheat from the chaff. Translation agencies will hopefully figure out that while MT technology will proliferate, the shortage of “good” translators will only intensify in a future where global companies want to translate 10X or more the volume of information they do today.

We see many examples of MT use by agencies today but very few of these would qualify as skillful and appropriate and even fewer would be considered fair to the post-editors. It is my sense that MT technology will only offer long-term competitive advantage to those who use it with skill and real expertise and have skilled translators involved in the process. It is very easy to dump data into an instant MT portal and get some kind of an engine, but not so easy to get an engine that provides a long-term cost and efficiency production advantage.

If you are one of those translators who feels that they will NEVER do post-editing work or have decided that you simply don’t want to do it because you have plenty of “regular” work then this post will probably not be of any interest. I am one of those people who believe that MT will continue to gather momentum and that it is useful to translators to understand why and determine when to get involved or not. (And this is not just because I am involved with the sales and marketing of this technology.) It just simply makes sense at a common sense level. The first thing to understand is that all MT engines are not equal and that free online MT is not the best example of professional use of this technology even though it can be surprisingly good in some languages.

Since there is a great deal of variation in the specific MT output that translators are expected to post-edit, I think it makes sense for a translator to understand each unique opportunity as it comes along, and determine whether it is worth his/her time and engagement. Some MT opportunities can pay better than standard translation work, if the word rate to MT quality ratios are properly determined, and thus I think it makes sense for translators to understand when this is actually the case. Early experiences with incompetent or unscrupulous MT practitioners have helped PEMT work develop a reputation for being mind-numbing work that is poorly compensated. This IMO is more a reflection of the quality of these early efforts than of the real possibilities of the technology when used with expertise.

Thus I have come up with a simple checklist for a translator to evaluate a potential PEMT “opportunity” and decide whether to engage or not.

1) Compensation is linked to actual work effort

The MT output quality to word rate (financial compensation) relationship is a fundamental issue for translators. It is important to understand the “average” output quality of the MT output and then understand the effort required to fix it to target quality levels, and ensure that it is related to the compensation offered.

Since with PEMT we are generally talking about asking translators to accept a lower rate than they normally charge, it is important that there is a modicum of trust with the agency in question. This would allow a fair and reasonable rate to be established that matches the effort required to get the MT output to required target levels. This subject is dealt with in some detail here and here. The better you understand your own personal productivity with the specific MT output you are dealing with the more informed your decision will be. The specific effort level can be assessed quickly by doing a small test with a “representative sample” of a 100 or so sentences. The throughput measurements you make can then be used to extrapolate and calculate an acceptable rate.

So if your normal throughput is 2,500 words/day (313 words/hour) and you find that with the test MT output you can expect to do 5,000 words a day, it would be reasonable to accept a rate that is 60% of your normal rate and even 50% might be fair if you feel the sample is very representative and you do not mind this type of work. (I would err on the higher side as the test is only as good and representative as your test sample.)

A critical skill to develop in these scenarios is the quick assessment of the MT output quality and determine what your work throughput and thus acceptable rate is. Remember small grammar and word order errors are much easier to correct than word salad and bad and inconsistent terminology problems which require research. The rapid assessment of the quality of the MT output should be an important part of determining when a project is worth doing or not. Having a basic understanding of BLEU, Edit Distance and other methodologies is useful as this can expedite assessment of the PEMT opportunity. Asia Online offers free software to run BLEU and develop your own error classification based calculations.

Some things to be wary of include:

2) Trust and communication around technological uncertainty

I think that one of the main reasons MT has taken so long to gain momentum is the low levels of trust within the supply chain and unfortunate early experiences with MT where rates were lowered unfairly and translators were expected to bear the brunt of incompetent use of MT technology. The stakeholders all need to understand that the nature of MT requires a higher tolerance for “outcome uncertainty” than most are accustomed to. Though it is increasingly clear that domain focused systems in Romance languages are more likely to succeed with MT, it is not clear very often how good an MT engine will be a priori, and investments to measure this need to be made to get to a point to understand this.

The stakeholders all need to understand this and work together and each make concessions and contributions to make this happen in a mutually beneficial way. This is of course easier said than done as somebody has to usually put some money down to begin this process. The reward is long-term production efficiency so hopefully enterprise buyers are willing to fund this, rather than go the fast and dirty MT route as some have been doing. Agencies that are new to MT and post-editing are those most likely to get it wrong and translators should seek out agencies that are sensitive to resolving the uncertainty in a fair way.

Some specific things that translators can watch for include:

One of the common complaints about PEMT is about the drudgery of error correction work. This does suggest that not all translators want to do this kind of work or are well suited to it. Many translators are also seeking to provide feedback and steering advice to the MT system to reduce the drudgery, however, not many MT systems can properly use and leverage this type of feedback. Some like the Asia Online Language Studio are designed from the outset to utilize this type of feedback. We are seeing now that many translators do realize that MT can be an aid, much like TM, to get repetitive translation work done faster. MT offers “fuzzy matches” for each new segment that is translated through the system. Good MT systems will produce the equivalent of high quality fuzzy matches and will be much more consistent in output quality than what most of us experience with free online MT (or most DIY efforts) as shown in the second graphic above. Bad MT systems will be inconsistent, unpredictable, produce lower quality output and generally be unresponsive to any corrective feedback, especially when the practitioners are simply dumping data into an instant MT engine making portal.

The following are some characteristics of superior MT platforms:

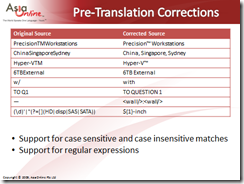

Examples of correcting problematic source text to make the post-editing task easier.

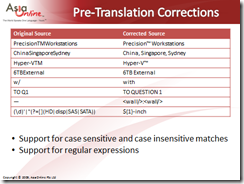

Example of using preferred terminology in the event that the original training chooses other terms.

If you have a good feeling about all three items in the list above PEMT can be just another kind of translation task and can sometimes be one that offers greater financial reward.

There have been several studies of varying quality that examine how PEMT compares with regular translation approaches and we see mixed results and often experimental bias. I just saw this study on The Efficacy of Human Post-Editing for Language Translation from Stanford that attempts to measure this in as objective manner as possible. I like that they also summarize many previous studies. Some may find fault with this one too because they use oDesk, even though these were translators who had passed a 40 question skill/competence test. IMO the study is perhaps more objective and rigorous than most I have seen from the localization community and I think it is worth noting the key findings and is worth a closer look by anybody interested this issue. They ran a carefully monitored regular vs. PEMT comparison test for 3 languages (English to Arabic, French, and German) and found the following:

If you are interested in my slides from the MiTiN presentation you can find them here.

Translators have much more power than they realize, and I predict that they will eventually learn to separate the wheat from the chaff. Translation agencies will hopefully figure out that while MT technology will proliferate, the shortage of “good” translators will only intensify in a future where global companies want to translate ten times or more the volume of information they translate today.

We see many examples of MT use by agencies today, but very few of these would qualify as skillful and appropriate, and even fewer would be considered fair to the post-editors. My sense is that MT technology will only offer a long-term competitive advantage to those who use it with real skill and expertise and who involve skilled translators in the process. It is very easy to dump data into an instant MT portal and get some kind of engine, but much harder to get an engine that delivers a lasting advantage in production cost and efficiency.

If you are a translator who will NEVER do post-editing work, or you have simply decided you don’t want to because you have plenty of “regular” work, then this post will probably not be of much interest. I am one of those people who believe that MT will continue to gather momentum, and that it is useful for translators to understand why and to decide when to get involved and when not to. (And this is not just because I am involved in the sales and marketing of this technology.) It simply makes common sense. The first thing to understand is that not all MT engines are equal, and that free online MT is not the best example of professional use of this technology, even though it can be surprisingly good in some languages.

Since the MT output that translators are expected to post-edit varies a great deal, I think it makes sense for a translator to evaluate each opportunity as it comes along and decide whether it is worth their time and engagement. Some MT opportunities can pay better than standard translation work if the word rate is properly matched to the MT output quality, so it makes sense for translators to learn to recognize when this is actually the case. Early experiences with incompetent or unscrupulous MT practitioners have given post-editing of MT output (PEMT) a reputation as mind-numbing, poorly compensated work. IMO this reflects the quality of those early efforts more than the real possibilities of the technology when it is used with expertise.

Thus I have come up with a simple checklist for a translator to evaluate a potential PEMT “opportunity” and decide whether to engage or not.

1) Compensation is linked to actual work effort

The relationship between MT output quality and word rate (financial compensation) is fundamental for translators. You need to understand the “average” quality of the MT output, estimate the effort required to bring it up to the target quality level, and make sure the compensation offered reflects that effort.

Since PEMT generally means asking translators to accept a lower rate than they normally charge, it is important to have a modicum of trust with the agency in question. This allows a fair and reasonable rate to be established that matches the effort required to bring the MT output up to the required target level. This subject is dealt with in some detail here and here. The better you understand your own productivity with the specific MT output you are dealing with, the more informed your decision will be. The effort level can be assessed quickly with a small test on a “representative sample” of 100 or so sentences, and your throughput measurements can then be used to extrapolate and calculate an acceptable rate.

So if your normal throughput is 2,500 words/day (about 313 words/hour over an 8-hour day) and you find that with the test MT output you can expect to do 5,000 words a day, a rate of 50% of your normal rate would leave your daily earnings unchanged. It would therefore be reasonable to accept a rate that is 60% of your normal rate, and even 50% might be fair if you feel the sample is very representative and you do not mind this type of work. (I would err on the higher side, as the test is only as good as your sample is representative.)
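The arithmetic behind this rule of thumb can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: you time yourself on the test sample, count its words, and work an 8-hour day. The function names and the 20% safety margin are hypothetical choices, picked so that the example reproduces the 60% figure above, not taken from any tool.

```python
def words_per_day(sample_words: int, minutes_taken: float, hours_per_day: float = 8.0) -> float:
    """Extrapolate daily throughput from a timed test sample (assumes a steady pace)."""
    words_per_hour = sample_words / (minutes_taken / 60.0)
    return words_per_hour * hours_per_day


def break_even_fraction(normal_wpd: float, pemt_wpd: float) -> float:
    """Fraction of the normal per-word rate at which PEMT pays the same per day."""
    return normal_wpd / pemt_wpd


def suggested_pemt_rate(normal_rate: float, normal_wpd: float, pemt_wpd: float,
                        safety_margin: float = 0.2) -> float:
    """Break-even rate plus a safety margin, never above the normal rate.

    The margin (a hypothetical 20% by default) hedges against a test sample
    that turns out to be less representative than hoped.
    """
    fraction = break_even_fraction(normal_wpd, pemt_wpd) * (1.0 + safety_margin)
    return normal_rate * min(fraction, 1.0)


if __name__ == "__main__":
    # Hypothetical test: 1,250 words post-edited in 120 minutes (625 words/hour).
    pemt_wpd = words_per_day(1250, 120)
    print(pemt_wpd)                                              # 5000.0
    print(break_even_fraction(2500, pemt_wpd))                   # 0.5
    print(round(suggested_pemt_rate(0.10, 2500, pemt_wpd), 4))   # 0.06
```

Setting the margin to zero gives the pure break-even rate (50% in the example above); the margin is where your judgment about how representative the sample was comes in.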

A critical skill to develop in these scenarios is the ability to quickly assess the quality of the MT output and work out your likely throughput, and thus an acceptable rate. Remember that small grammar and word-order errors are much easier to correct than word salad or bad and inconsistent terminology, which require research. A rapid assessment of the MT output quality should be an important part of deciding whether a project is worth doing. A basic understanding of BLEU, edit distance, and other methodologies is useful, as it can speed up the assessment of a PEMT opportunity. Asia Online offers free software to run BLEU and develop your own calculations based on error classification.
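As a rough illustration of what “edit distance” measures, the sketch below counts the word-level insertions, deletions, and substitutions needed to turn raw MT output into its post-edited version, normalized by the length of the post-edited text. This is a simplified, TER-like proxy of our own (real TER also counts block shifts), not Asia Online's software or any standard scorer, and the segment pairs are hypothetical.

```python
def word_edit_distance(hyp: list[str], ref: list[str]) -> int:
    """Levenshtein distance over words; each insertion, deletion or substitution costs 1."""
    prev = list(range(len(ref) + 1))  # distance from an empty hyp prefix to each ref prefix
    for i, h in enumerate(hyp, start=1):
        curr = [i] + [0] * len(ref)
        for j, r in enumerate(ref, start=1):
            curr[j] = min(
                prev[j] + 1,             # delete h
                curr[j - 1] + 1,         # insert r
                prev[j - 1] + (h != r),  # substitute (free if the words match)
            )
        prev = curr
    return prev[-1]


def edit_rate(mt_output: str, post_edited: str) -> float:
    """Edits per word of the post-edited segment (0.0 means the MT needed no changes)."""
    hyp, ref = mt_output.split(), post_edited.split()
    if not ref:
        return float(len(hyp) > 0)
    return word_edit_distance(hyp, ref) / len(ref)


def sample_edit_rate(pairs: list[tuple[str, str]]) -> float:
    """Word-weighted edit rate over a test sample of (mt_output, post_edited) pairs."""
    total_edits = sum(word_edit_distance(mt.split(), pe.split()) for mt, pe in pairs)
    total_words = sum(len(pe.split()) for _, pe in pairs)
    return total_edits / total_words if total_words else 0.0


if __name__ == "__main__":
    pairs = [
        ("the contract are signed by both party", "the contract is signed by both parties"),
        ("click the button save", "click the Save button"),
    ]
    for mt, pe in pairs:
        print(f"{edit_rate(mt, pe):.2f}  {mt!r} -> {pe!r}")
    print(f"sample: {sample_edit_rate(pairs):.2f}")
```

Lower numbers mean lighter editing, but a raw count does not capture the point made above: a terminology error that needs research costs far more time than a word-order fix, so the number is a starting point for judgment rather than a substitute for it.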

Some things to be wary of include:

- Agencies that establish an arbitrary lower word rate independent of language and MT output quality. This is a pretty good clue that they don’t know what they are doing and a sign that there will be dissatisfaction all around.

- Agencies using DIY MT who don’t really understand what they are doing. Expect great inconsistency and variability in output quality, and usually lower overall quality, which means greater PEMT effort.

- Agencies that have the same PEMT rate for tough languages like Japanese and easier languages like Spanish. I would generally expect the effort to be greater for tough languages, so they should be paid at a higher rate.

- Agencies that give you MT output that is lower in quality than you could get on your own from Google or Microsoft. This is a sign that they do not understand what they are doing.

- There are many agencies out there that have very little understanding of the complexities of MT and are only using it as a way to reduce costs. They will give you crappy output to edit and expect you to fix it for a fraction of a reasonable rate. Identify these agencies and let fellow translators know who they are. Avoid working with them.

- Hourly rates may actually be better for some kinds of MT projects where the translator is expected to only do a partial correction. Research suggests that it is very hard to define how far a partial correction goes.

2) Trust and communication around technological uncertainty

I think one of the main reasons MT has taken so long to gain momentum is the low level of trust within the supply chain, along with unfortunate early experiences where rates were lowered unfairly and translators were expected to bear the brunt of incompetent use of MT technology. All stakeholders need to understand that the nature of MT requires a higher tolerance for “outcome uncertainty” than most are accustomed to. Though it is increasingly clear that domain-focused systems in Romance languages are more likely to succeed with MT, it is often not clear a priori how good an MT engine will be, and investments need to be made to measure this.

The stakeholders need to work together, each making concessions and contributions, so that this happens in a mutually beneficial way. This is of course easier said than done, as somebody usually has to put some money down to begin the process. The reward is long-term production efficiency, so hopefully enterprise buyers are willing to fund this rather than go the quick and dirty MT route, as some have been doing. Agencies that are new to MT and post-editing are the most likely to get it wrong, and translators should seek out agencies that try to resolve the uncertainty in a fair way.

Some specific things that translators can watch for include:

- The quality of the dialogue and rapport with the project managers at the agency.

- Some agencies provide very clear examples of what they expect you to do with different kinds of errors. This is a good sign and helps focus the work in the most efficient way. Some, like Hunnect, develop an online training course for post-editors to clarify this.

- The agencies that are willing to work with translators to deal with this technological uncertainty are the ones to focus on. Again, Scott Bass from ALT offers wise words on PEMT Best Practices and an example of what a win-win scenario looks like.

One common complaint about PEMT is the drudgery of error-correction work, which suggests that not all translators want to do this kind of work or are well suited to it. Many translators also want to give feedback and steering advice to the MT system to reduce the drudgery; however, few MT systems can properly use and leverage this type of feedback. Some, like the Asia Online Language Studio, are designed from the outset to use it.

Many translators now realize that MT can be an aid, much like TM, for getting repetitive translation work done faster: MT offers a “fuzzy match” for every new segment that passes through the system. Good MT systems produce the equivalent of high-quality fuzzy matches and are much more consistent in output quality than what most of us experience with free online MT (or most DIY efforts), as shown in the second graphic above. Bad MT systems are inconsistent and unpredictable, produce lower-quality output, and are generally unresponsive to corrective feedback, especially when the practitioners are simply dumping data into an instant MT engine-building portal.
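To make the fuzzy-match analogy concrete, the sketch below scores each MT suggestion against the final post-edited segment with Python's standard `difflib.SequenceMatcher` and sorts the words into TM-style fuzzy bands. The character-based similarity and the band boundaries are assumptions chosen for illustration; CAT tools each compute fuzzy scores their own way, and agencies' discount grids vary.

```python
from collections import Counter
from difflib import SequenceMatcher

# Hypothetical band boundaries, loosely modeled on common TM fuzzy-match grids.
BANDS = [(1.00, "100%"), (0.95, "95-99%"), (0.85, "85-94%"), (0.75, "75-84%")]


def fuzzy_score(mt_suggestion: str, final: str) -> float:
    """Character-level similarity in [0, 1]; 1.0 means no edits were needed."""
    return SequenceMatcher(None, mt_suggestion, final).ratio()


def fuzzy_band(score: float) -> str:
    """Map a similarity score to the first band whose threshold it reaches."""
    for threshold, label in BANDS:
        if score >= threshold:
            return label
    return "no match"


def words_per_band(pairs: list[tuple[str, str]]) -> Counter:
    """Count post-edited words per fuzzy band, as a TM analysis report would."""
    counts: Counter = Counter()
    for mt, final in pairs:
        counts[fuzzy_band(fuzzy_score(mt, final))] += len(final.split())
    return counts


if __name__ == "__main__":
    sample = [
        ("Press the power button.", "Press the power button."),
        ("Remove the battery of the device.", "Remove the battery from the device."),
        ("Colour settings is in menu.", "Color settings are in the Display menu."),
    ]
    for band, words in words_per_band(sample).most_common():
        print(f"{band:>8}: {words} words")
```

A consistent engine pushes more words into the upper bands; an inconsistent one scatters them, which is one way to see the difference between good and bad MT systems described above.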

The following are some characteristics of superior MT platforms:

- The ability to provide some initial error pattern feedback to reduce mind-numbing correction work.

- Noticeable improvements in quality with relatively small amounts of corrective feedback.

- The ability to control the MT output with terminology or repetitive error pattern corrections at run time in addition to the upfront overall training, as this can greatly enhance the speed of the post-editing work.

- A defined process to take small amounts of corrective feedback to improve the engine BEFORE a production run to reduce the post-editing effort.

- The ability to control the overall linguistic style of the translations to match requirements.

Examples of correcting problematic source text to make the post-editing task easier.

Example of using preferred terminology in the event that the original training chooses other terms.
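Run-time terminology control and recurring error-pattern fixes can be pictured as a post-processing pass over the raw MT output. The sketch below is a hypothetical, regex-based illustration of that idea only, not how Asia Online Language Studio or any other platform implements it. The glossary entries and the error pattern are made up, and real systems typically apply terminology during translation and handle inflection and capitalization, which plain whole-word replacement does not.

```python
import re

# Hypothetical glossary: term the engine tends to produce -> client's preferred term.
PREFERRED_TERMS = {
    "hard disk": "hard drive",
    "log in": "sign in",
}

# Hypothetical recurring error pattern: a stray space before punctuation.
ERROR_PATTERNS = [
    (re.compile(r"\s+([,.;:!?])"), r"\1"),
]


def apply_terminology(text: str, glossary: dict[str, str]) -> str:
    """Replace whole-word glossary terms (case-insensitively) in a single pass."""
    if not glossary:
        return text
    lookup = {source.lower(): target for source, target in glossary.items()}
    # Longest terms first so a longer entry wins over a shorter one it contains;
    # one combined pattern also prevents a replacement from being replaced again.
    alternatives = sorted(lookup, key=len, reverse=True)
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(term) for term in alternatives) + r")\b",
        re.IGNORECASE,
    )
    # Note: the replacement uses the glossary's casing, not the original text's.
    return pattern.sub(lambda m: lookup[m.group(0).lower()], text)


def apply_error_patterns(text: str, patterns: list[tuple[re.Pattern, str]]) -> str:
    """Apply each (compiled regex, replacement) correction in order."""
    for pattern, replacement in patterns:
        text = pattern.sub(replacement, text)
    return text


if __name__ == "__main__":
    raw_mt = "Save the file to the hard disk , then log in again ."
    fixed = apply_error_patterns(apply_terminology(raw_mt, PREFERRED_TERMS), ERROR_PATTERNS)
    print(fixed)  # Save the file to the hard drive, then sign in again.
```

The appeal for post-editors is that each fix is made once, in the glossary or pattern list, instead of once per segment.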

If you have a good feeling about all three items in the list above, PEMT can be just another kind of translation task, and sometimes one that offers greater financial reward.

There have been several studies of varying quality comparing PEMT with regular translation, and they show mixed results and often experimental bias. I just saw this study from Stanford, The Efficacy of Human Post-Editing for Language Translation, which attempts to measure this in as objective a manner as possible. I like that it also summarizes many previous studies. Some may find fault with this one too because it used oDesk, even though the participants were translators who had passed a 40-question skill/competence test. IMO the study is more objective and rigorous than most I have seen from the localization community, its key findings are worth noting, and it deserves a closer look by anybody interested in this issue. The researchers ran a carefully monitored comparison of regular translation vs. PEMT for three language pairs (English to Arabic, French, and German) and found the following:

- Most translators found the MT (Google Translate) useful and preferred it to not having a suggestion

- PEMT reduces the time taken to get the task done

- Across languages they found that the suggested translations improve final quality

- Across languages, users provided the following ranking of basic parts of speech in order of decreasing translation difficulty: Adverb, Verb, Adjective, Other, Noun.

“Our results clarify the value of post-editing: it decreases time and, surprisingly, improves quality for each language pair. Our results strongly favor the presence of machine suggestions in terms of both translation time and final quality. If translators benefit from a barebones post-editing interface, then we suspect that more interaction between the UI and MT backend could produce additional benefits.”

I would love to hear what other translators have to share about their PEMT experiences, both positive and negative, along with any suggestions they might have for improving the process. I would like even more to hear what they think an ideal post-editing environment or workbench would look like, and what recommendations they would make.

If you are interested in my slides from the MiTiN presentation you can find them here.